I attended the day-long Code Retreat at Lean Dog’s floating office on Sunday.

We worked in pairs in 45-minute sessions, building Conway’s Game of Life. Each session started from scratch; we didn’t build on previous code.

After each session, teams talked about what they had discovered. Then we reorganized into new pairs and started over from the beginning.

First pairing

First, I paired with a C# developer. While other teams went right into writing tests, we spent a fair amount of time talking and sketching out ideas about how to model the game.

Then we made a grid that knew how to generate the next state just by copying itself into a new grid.

We planned to add code for each of the four rules of the Game of Life, one at a time.

So, we wrote a test that created a grid with just a single living cell. Then we told that grid to make a grid for the next generation, and our test verified that the single cell survived the copy.
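The session's actual code isn't shown here, but a minimal Python sketch of where that first test left us might look like this. The `Grid` class, its `next_grid` method, and the choice to store living cells as a set of `(x, y)` tuples are all hypothetical, for illustration only.

```python
import unittest


class Grid:
    """Hypothetical grid that stores living cells as a set of (x, y) tuples."""

    def __init__(self, cells=()):
        self.cells = set(cells)

    def next_grid(self):
        # The first version applies no rules at all: it only copies itself.
        return Grid(self.cells)


class FirstTest(unittest.TestCase):
    def test_single_cell_survives_copy(self):
        grid = Grid({(0, 0)})
        self.assertEqual(grid.next_grid().cells, {(0, 0)})
```

Under the real rules a lone cell dies of underpopulation, so this test only passes because no rules exist yet; it would have to be rewritten as soon as the first rule went in.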

Then we started working on the first rule: a living cell with fewer than two living neighbors dies.

We burned through the remainder of the time sketching out ideas on my clipboard. By the end, it was clear that we only needed to pay attention to living cells and the neighbors of living cells.
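That observation can be sketched in a few lines of Python, assuming living cells are stored as a set of `(x, y)` tuples (the function names are hypothetical). Only living cells and their neighbors can be alive in the next generation, so that set is the entire search space.

```python
def neighbors(cell):
    """Return the eight (x, y) coordinates surrounding a cell."""
    x, y = cell
    return {(x + dx, y + dy)
            for dx in (-1, 0, 1)
            for dy in (-1, 0, 1)
            if (dx, dy) != (0, 0)}


def candidates(living):
    """Cells worth examining: every living cell plus every neighbor of one."""
    result = set(living)
    for cell in living:
        result |= neighbors(cell)
    return result


print(len(candidates({(0, 0)})))  # 9: the cell itself and its eight neighbors
```

Everything outside `candidates(living)` is guaranteed to stay dead, which is why an infinite board never has to be represented.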

It was also clear that the time spent writing the first test, and then the code to satisfy it, did nothing to move us toward a real solution.

Second pairing

Next I worked with a Java guy. This time, we did something a little closer to TDD.

We decided to start from the point of view of an end-user firing up the game. The user would want to make a grid, add a few living cells, and then tell the grid to generate its next state.

We started writing tests almost immediately; the first code we wrote was a test. In it, we instantiated a Grid class. That test blew up with an error, since no Grid class existed, so we wrote one. It had no methods or attributes, but that was all we needed to write to satisfy our test.

Next we wrote a new test that instantiated a grid and then added a single cell by calling an (undefined) addCell(…) method.

After seeing that test crash with an error, we wrote an addCell(…) method that was a no-op, because that’s all our tests required us to do.

Then we wrote a test for rule one: a living cell with fewer than two living neighbors dies.

In this test, we made a grid, added a single cell, and then told the grid to create a new grid representing its next generation. Then we used an assert to verify that the new grid had no cells.

First we added a “cells” ArrayList instance attribute to our Grid class. Then we wrote a generate_next_grid method on the Grid class, and all it did was instantiate and return a new grid with an empty cells list.

And bingo, we had enough code again to pass our test.
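For readers who don't read Java, here is roughly where that code stood, rendered as a Python sketch. The names are adapted rather than copied (`add_cell` stands in for `addCell(…)`, and a plain list stands in for the ArrayList). Every test passes, yet nothing resembling the Game of Life exists.

```python
import unittest


class Grid:
    def __init__(self):
        # Stands in for the Java ArrayList attribute.
        self.cells = []

    def add_cell(self, x, y):
        # Deliberately a no-op: no test has required it to do anything yet.
        pass

    def generate_next_grid(self):
        # Satisfies the rule-one test by always returning an empty grid.
        return Grid()


class GridTest(unittest.TestCase):
    def test_can_make_grid(self):
        Grid()

    def test_can_add_cell(self):
        Grid().add_cell(0, 0)

    def test_lonely_cell_dies(self):
        grid = Grid()
        grid.add_cell(0, 0)
        self.assertEqual(grid.generate_next_grid().cells, [])
```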

At this point, I became convinced that the kind of testing we were doing was hurting our productivity. We had fallen into a kind of Zeno’s paradox* where we would never actually get anything done, because we could always think of some new test that covered a trivial subset of the project and then work on writing code to satisfy that test.

*A dude shoots an arrow at a target. Before the arrow gets to the target, it has to cross half the distance to the target, so it does that. But now it has to cross half of the remaining distance, so it does that too.

Then it has to cross half of that remaining distance, and then half of the remaining distance after that, and so on for infinity.

Since the arrow has an infinite number of partial distances to cross, the arrow will never reach the target.

Read more about it here.

I was itching to solve a real problem rather than just watch lights blink from red to green, so I cajoled my partner into writing a test for the blinker pattern: a 3×1 row of cells turns into a 1×3 column on the second turn, and then on the third turn turns back into a 3×1 row.

So we started talking about how to implement the rules. We decided we needed to figure out, for every cell near a living cell, how many living neighbors it had. So we wrote a method to generate the coordinates of the eight cells neighboring a given cell, and wrote a test for that.

Next we were going to add a neighbor_count hashmap attribute to our grid, mapping each cell to how many living neighbors it had, but then time ran out.
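The neighbor_count idea can be sketched in Python with `collections.Counter` standing in for the hashmap, assuming cells are `(x, y)` tuples (the names here are illustrative). Each living cell adds one to the count of each of its eight neighbors, so any cell that never shows up has zero living neighbors.

```python
from collections import Counter

# Offsets from a cell to its eight surrounding cells.
OFFSETS = [(dx, dy) for dx in (-1, 0, 1) for dy in (-1, 0, 1) if (dx, dy) != (0, 0)]


def neighbor_count(living):
    """Map each cell adjacent to a living cell to its number of living neighbors."""
    counts = Counter()
    for x, y in living:
        for dx, dy in OFFSETS:
            counts[(x + dx, y + dy)] += 1
    return counts


blinker = {(0, 0), (1, 0), (2, 0)}
counts = neighbor_count(blinker)
print(counts[(1, 0)])  # 2: the middle cell touches both ends
print(counts[(1, 1)])  # 3: a cell beside the middle, off the row, touches all three
print(counts[(9, 9)])  # 0: Counter returns 0 for cells it never saw
```

Building the counts in one pass over the living cells avoids asking every candidate cell to count its own neighbors.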

At this point, I had a good feeling about the algorithms involved in solving this project.

Third pairing

Right before the third pairing, a much older fellow raised his hand and asked if anyone wanted to work on a solution that tracked only the living cells. That was the approach I had in mind, so I paired with him. He said he didn’t care what language we used, but he was a lousy typist, so I said I could do the typing and we could use Python.

At first we excitedly talked over each other and drew pictures until we confirmed we had the same solution in mind. It turned out my partner was an old Common Lisp hacker, and once he realized that I understood what he meant when he talked about stuff like &rest and cadr, we got along really well.

The first bit of code I wrote was a cell class with an x and a y attribute and a method to generate a list of its eight neighboring cells.

I wrote a quick test for that method in the same file as my code to make sure it worked.

Of course, that test failed. I had lots of syntax errors because I was writing so fast and defending against good-natured jabs about how vim was so vastly inferior to emacs.

After a few cycles of fixing typos and rerunning the tests, we got the syntax errors out of the code. Then we attacked the project of generating the next state of the grid.

I had already seen how easy it was to write a test for the blinker pattern, so I wrote a quick one. The test made a grid with three cells in a vertical line, told the grid to generate its next state, and then checked that in the next state the three cells were in a horizontal line.

We wrote a grid class with a dictionary attribute of living cells in the current generation and a dictionary attribute of cells that would live in the next generation.

Then we wrote the method in our grid class that generated the next grid. It did an outer loop over all the living cells; for each living cell, it looped through that cell’s neighbors, and for each neighbor it did an inner loop over that neighbor’s own neighbors and counted how many were alive.

Then we implemented the four rules of the game with two if-clauses.
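Here is a Python sketch of the approach described in the last few paragraphs. It is a reconstruction, not the code we typed that day: it uses sets of `(x, y)` tuples instead of the two dictionaries, and the names `neighbors`, `Grid`, `next_grid`, and `BlinkerTest` are illustrative.

```python
import unittest


def neighbors(x, y):
    """The eight coordinates surrounding (x, y)."""
    return [(x + dx, y + dy)
            for dx in (-1, 0, 1)
            for dy in (-1, 0, 1)
            if (dx, dy) != (0, 0)]


class Grid:
    def __init__(self, living):
        self.living = set(living)

    def next_grid(self):
        next_living = set()
        for x, y in self.living:             # outer loop: every living cell
            for cell in neighbors(x, y):     # each neighbor of that living cell
                # Inner loop: count the living neighbors of that neighbor.
                count = sum(1 for n in neighbors(*cell) if n in self.living)
                alive = cell in self.living
                # The four rules folded into two if-clauses: a living cell
                # survives with 2 or 3 neighbors (under- and overpopulation
                # kill the rest), and a dead cell with exactly 3 is born.
                if alive and count in (2, 3):
                    next_living.add(cell)
                if not alive and count == 3:
                    next_living.add(cell)
        return Grid(next_living)


class BlinkerTest(unittest.TestCase):
    def test_vertical_becomes_horizontal(self):
        vertical = Grid({(1, 0), (1, 1), (1, 2)})
        self.assertEqual(vertical.next_grid().living, {(0, 1), (1, 1), (2, 1)})
```

A living cell only needs to be examined if it has living neighbors, and then it is itself a neighbor of a living cell, so walking the neighbors of living cells covers every cell that can be alive next turn. Many cells get examined more than once, which is wasteful but harmless, because adding to a set twice changes nothing.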

We ran the test again, and of course, the code was again littered with indentation errors and mismatched parentheses because of how fast I had been typing and moving stuff around.

Then the timer went off. I stayed put while everybody was gathering in the middle to talk about how everything went. Within about 30 seconds I got the last few silly typing glitches fixed, and BAM. The test passed.

I walked over to my partner and told him we solved it.

Then we listened to other teams talk. The conversation centered around naming conventions that people were coming up with, like “sustainable neighborhood” and “four horsemen”.

I raised my hand and asked if anybody had written tests for any Game of Life patterns beyond the blinker, like the glider or any of the other patterns shown on the Wikipedia page.

Nobody replied to that. Instead the conversation shifted to a discussion of whether or not booleans hidden behind getters and setters were a sign of a bad design.

I think it was while somebody was talking about writing tests for their (nonexistent) display code that my partner leaned over and said, “This is a drunkard’s search.” I didn’t know what that meant, so he explained it. Here’s the Wikipedia entry:

Conducting a drunkard’s search is to look in the place that’s easiest, rather than in the place most likely to yield results. Taken from an old joke about a drunkard who loses his car keys while unlocking his car and is found looking under a streetlamp down the road because the light is better, it has been an object of consideration in the social sciences since at least 1964.

Fourth pairing

I asked if anybody wanted to see a Python solution, and four of us worked together, with me doing the typing.

First I described how we would need to find the eight neighboring cells of a particular cell. So I wrote a test for that, and then wrote the code to satisfy that test.

Then once that was finished, I described what the blinker looked like, and I wrote a test for that.

Then I reused the approach that we came up with in the third pairing, and then got it working fairly quickly.

At one point I showed the code we had written to one of the people running the event, and he pointed out two things (this is roughly paraphrased, and I hope I am getting these ideas correct):

- I had four if-clauses in a single function, and each if-clause tested two independent variables. So that means there were dozens of paths through the function that would have to be tested.

- My blinker test could be satisfied by code that just hardcoded the expected results, rather than built an actual game-of-life system. My test didn’t force a complete solution.
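To make the second point concrete, here is a deliberately bogus Python sketch (all names are made up): `fake_step` just returns the expected answer, so it passes a single blinker check without knowing anything about the rules. A second pattern, such as the 2×2 block that should never change, is enough to expose it.

```python
VERTICAL = {(1, 0), (1, 1), (1, 2)}
HORIZONTAL = {(0, 1), (1, 1), (2, 1)}
BLOCK = {(0, 0), (0, 1), (1, 0), (1, 1)}


def fake_step(living):
    """Knows nothing about the rules; always returns the blinker's answer."""
    return set(HORIZONTAL)


def blinker_test_passes(step):
    return step(VERTICAL) == HORIZONTAL


def block_test_passes(step):
    # A 2x2 block is a still life, so a correct step leaves it unchanged.
    return step(BLOCK) == BLOCK


print(blinker_test_passes(fake_step))  # True
print(block_test_passes(fake_step))    # False
```

Each extra pattern (the glider, for example) narrows the space of cheating implementations further.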

Both points illustrate the biggest difference between the TDD mindset and whatever it is called that I do (design by intuition?).

The fact that I didn’t have a test for every path I created didn’t really concern me, because I was focused on getting the blinker pattern to work. If that blinker test failed, I might go into my code and make sure that what I wrote was really what I meant, or maybe I’d even throw it all out and start from scratch. But I wouldn’t worry about covering every corner of this version until I was confident I was on the right track.

As for the second point, I use tests to catch errors, not to tell me what to write. I wouldn’t write code that returns a hard-coded list of values just to pass a test, unless I actually thought I could solve the real problem by doing that.

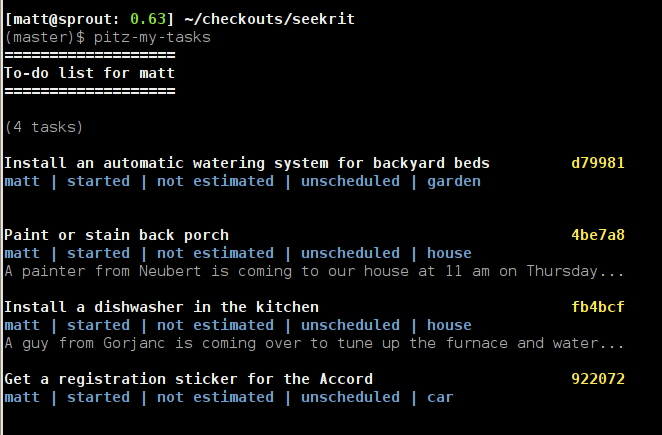

Anyhow, we got everything working pretty quickly this second time. You can see the output from my fourth pairing here.

After you download that code, you can run the tests like this:

$ python life.py

..

--------------------------------------------------------------------

Ran 2 tests in 0.001s

OK

Those two dots mean that my two tests passed. I spent the remainder of the time showing how to use the interactive interpreter and how to write doctests. I’ve tested a few of the patterns from the Wikipedia page, and my code seems to do the right thing, but I’m not absolutely convinced that this implementation is correct.
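For anyone who wasn’t in the room, this is the flavor of doctest I was demonstrating: the examples live in the docstring, and `doctest.testmod()` reruns them and compares the printed output. The `neighbors` function here is a hypothetical stand-in, not the code in `life.py`.

```python
import doctest


def neighbors(x, y):
    """Return the eight coordinates surrounding (x, y), sorted.

    >>> len(neighbors(0, 0))
    8
    >>> neighbors(0, 0)[0]
    (-1, -1)
    >>> (0, 0) in neighbors(0, 0)
    False
    """
    return sorted((x + dx, y + dy)
                  for dx in (-1, 0, 1)
                  for dy in (-1, 0, 1)
                  if (dx, dy) != (0, 0))


if __name__ == "__main__":
    # Silent when every example matches; prints a report for any mismatch.
    doctest.testmod()
```

Sorting the result keeps the output order stable, which matters because doctest compares text exactly.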

Fifth pairing

I worked with two guys, and we used Ruby and RSpec. I described the algorithm I had used in the last two sessions, and we decided to build that.

We went with the approach of not writing any more code than was required to satisfy the tests.

So first we wrote some tests to instantiate a cell class, then some tests that called a neighbors method on the cell class and verified that it returned a list of eight things, and then we verified that the first and last elements in the neighbors list were at the positions we expected.

Then we started work on the grid class. We wanted to make sure that two different cell objects with the same values for their x and y attributes would be considered equal. But none of us knew how to redefine the == operator in Ruby, and while we were researching it, time ran out.
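For the record, Ruby lets you redefine `==` with an ordinary `def ==(other)` method, and objects used as Hash keys also need `eql?` and `hash`. Here is the same idea in Python, using a hypothetical `Cell` class: `__eq__` gives value equality, and `__hash__` makes equal cells interchangeable as set members or dictionary keys.

```python
class Cell:
    """A cell whose identity is its coordinates, not the object itself."""

    def __init__(self, x, y):
        self.x = x
        self.y = y

    def __eq__(self, other):
        if not isinstance(other, Cell):
            return NotImplemented
        return (self.x, self.y) == (other.x, other.y)

    def __hash__(self):
        # Equal cells must hash the same so sets and dicts treat them as one key.
        return hash((self.x, self.y))

    def __repr__(self):
        return f"Cell({self.x}, {self.y})"


a, b = Cell(1, 2), Cell(1, 2)
print(a == b, a is b)  # True False
print(len({a, b}))     # 1
```

Decorating a class with `@dataclass(frozen=True)` generates an equivalent `__eq__` and `__hash__` from its fields, which is less typing once the class has settled down.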

Again, I felt like we had fallen into the trap of slicing off trivial subsets of the real problem, and solving them, rather than attacking the real problem.

Commentary

Writing tests to check for errors (which is what I do) is not test-driven development. I daydream about solutions until I find one that I like, and then I build it, and I see if it works by writing tests to cover all the use cases I can think of.

My design comes from idle thoughts and doodles on my notepad, not from the testing.

Final note: I’ve tried my best to keep any generalizations and extrapolations out of this post. I don’t mean for this to be seen as an attack on anyone’s style of work. I’m very, very grateful for everything I’ve learned about how to write tests for software from the TDD community.